Understanding Robots.txt

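Robots.txt is a plain-text file, served from the root of a website (at /robots.txt), that tells web robots such as search engine crawlers which parts of the site they may crawl. It follows the Robots Exclusion Protocol, and its rules can cover the entire site or only specific sections. Because crawlers from search engines such as Google, Bing, and Yandex read this file before crawling, it influences whether pages are crawled promptly or skipped, which in turn affects how quickly, or whether, they appear in those search engines' indexes.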
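To illustrate how a well-behaved crawler uses the file, here is a minimal Python sketch built on the standard library's `urllib.robotparser`. It assumes a hypothetical site `https://example.com`, made-up rules (`Disallow: /private/`), and a hypothetical crawler name `ExampleBot`. It derives the robots.txt location from a page URL (the file always sits at the root of the host) and checks pages against the rules without any network access.

```python
from urllib.parse import urlsplit, urlunsplit
from urllib.robotparser import RobotFileParser


def robots_url_for(page_url: str) -> str:
    """Return the robots.txt URL that governs page_url (always at the host root)."""
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


# Hypothetical rules for a hypothetical site: keep all robots out of /private/.
EXAMPLE_RULES = [
    "User-agent: *",
    "Disallow: /private/",
]


def polite_check(page_url: str, agent: str = "ExampleBot") -> bool:
    """Decide whether `agent` (a hypothetical crawler name) may crawl page_url.

    A real crawler would download robots_url_for(page_url) first; here the
    rules are parsed from a local list so the sketch runs offline.
    """
    parser = RobotFileParser(robots_url_for(page_url))
    parser.parse(EXAMPLE_RULES)
    return parser.can_fetch(agent, page_url)


if __name__ == "__main__":
    print(robots_url_for("https://example.com/blog/post.html"))  # https://example.com/robots.txt
    print(polite_check("https://example.com/blog/post.html"))  # True
    print(polite_check("https://example.com/private/notes.html"))  # False
```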

How to Configure a Custom Robots.txt in Blogspot:

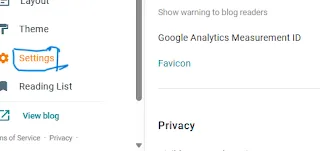

1. To begin, sign in to your blog's dashboard.

2. Go to Settings.

3. Open Search preferences.

4. Under Custom robots.txt, click Edit.

5. Enter the following rules:

User-agent: Mediapartners-Google

Disallow:

User-agent: *

Disallow: /search

Allow: /

Sitemap: https://ebaidghpage.blogspot.com/sitemap.xml
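To check what these rules allow, the sketch below parses them with Python's standard-library `urllib.robotparser`. The post and label URLs are hypothetical examples, and Googlebot stands in as a representative crawler. The AdSense crawler identifies itself as `Mediapartners-Google` (one word), so a group headed `Media partners-Google` would not apply to it. Python's parser also treats a blank line as the end of a group, so the sketch writes each group's lines consecutively.

```python
from urllib.robotparser import RobotFileParser

BLOG = "https://ebaidghpage.blogspot.com"

# The rules from step 5, with each group's lines kept together because
# Python's parser ends a group at a blank line.
BLOGSPOT_RULES = f"""\
User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search
Allow: /

Sitemap: {BLOG}/sitemap.xml
"""


def load_rules(text: str) -> RobotFileParser:
    """Parse robots.txt text locally (no network request)."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


if __name__ == "__main__":
    rules = load_rules(BLOGSPOT_RULES)
    # Hypothetical URLs: an ordinary post and a label page under /search.
    post = BLOG + "/2024/01/example-post.html"
    label = BLOG + "/search/label/News"
    print(rules.can_fetch("Googlebot", post))  # True: only /search is blocked
    print(rules.can_fetch("Googlebot", label))  # False: matches Disallow: /search
    print(rules.can_fetch("Mediapartners-Google", label))  # True: empty Disallow allows all
    print(rules.site_maps())  # ['https://ebaidghpage.blogspot.com/sitemap.xml']
```

Python's parser applies the first rule that matches, whereas Google documents a most-specific (longest) match. For these particular rules both approaches give the same answers, because `/search` is the only rule longer than `/`.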

Be sure to replace the URL in the Sitemap line with your own blog's address.
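If you manage several blogs, a small helper can produce the same rules with the correct Sitemap line. This is a hypothetical sketch, not a Blogger feature: `custom_robots_txt` and the placeholder address `https://yourblog.blogspot.com` are assumptions, and the helper keeps only the scheme and host of the URL you pass in.

```python
from urllib.parse import urlsplit


def custom_robots_txt(blog_url: str) -> str:
    """Hypothetical helper: build the step-5 rules for the blog at blog_url.

    Only the scheme and host are used, so a trailing slash or a post path
    does not change the result.
    """
    parts = urlsplit(blog_url)
    if not parts.scheme or not parts.netloc:
        raise ValueError(f"expected a full URL such as https://name.blogspot.com, got {blog_url!r}")
    root = f"{parts.scheme}://{parts.netloc}"
    return "\n".join([
        "User-agent: Mediapartners-Google",
        "Disallow:",
        "",
        "User-agent: *",
        "Disallow: /search",
        "Allow: /",
        "",
        f"Sitemap: {root}/sitemap.xml",
    ]) + "\n"


if __name__ == "__main__":
    # "yourblog" is a placeholder; use your own blog's address.
    print(custom_robots_txt("https://yourblog.blogspot.com/"))
```

Paste the printed text into the Custom robots.txt box in place of typing it by hand.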